The title of CS 685 is “Machine Learning”, which doesn’t reflect the actual content of the course. The title of CS 485, however, does: “Statistical and Computational Foundations of Machine Learning”. In my opinion, this course is closer to statistical learning theory than to machine learning, since machine learning in the usual sense is more like CS 480 and similar courses, which just do some magic… Also, Shai’s CS 245 covers Kolmogorov complexity, which has a neat application in this course, though he covered it in CS 245 (Winter 2021), not in CS 485.

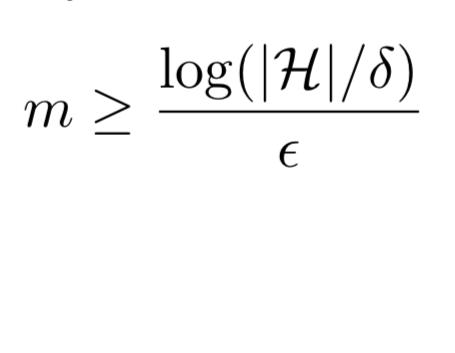

These notes cover the theoretical foundations of machine learning. Topics include PAC learning, empirical risk minimization, the bias-complexity tradeoff, VC dimension and the fundamental theorem of PAC learning, Sauer’s lemma, nonuniform learnability, minimum description length, computational complexity of learning, and linear predictors (halfspaces, perceptron).